Development Fuel: software testing in the large

by Adam Tornhill and Seweryn Habdank-Wojewodzki, July 2012

Introduction

As soon as a software project grows beyond the hands of a single individual, the challenges of communication and collaboration arise. We must ensure that the right features are developed, that the product works reliably as a whole and that features interact smoothly. And all that within certain time constraints. These aspects combined place testing at the heart of any large-scale software project.

This article grew out of a series of discussions around the role and practice of tests between its authors. It's an attempt to share the opinions and lessons learned with the community. Consider this article more a collection of ideas and tips on different levels than a comprehensive guide to software testing. There certainly is more to it.

Keeping knowledge in the tests

We humans are social creatures. Yes, even we programmers. To some extent we have evolved to communicate efficiently with each other. So why is it so hard to deliver that killer app the customer has in mind? Perhaps it's simply because the level of detail present in our average design discussion is way beyond what the past millenniums of evolution required. We've gone from sticks and stones to multi-cores and CPU caches. Yet we handle modern technology with basically the same biological pre-requisites as our pre-historic ancestors. Much can be said about the human memory. It's truly fascinating. But one thing it certainly isn't is accurate. It's also hard to back-up and duplicate. That's where documentation comes in on complex tasks such as software. Instead of struggling to maintain repetitive test procedures in our heads, we suggest relaying on structured and automated test cases for recording knowledge. Done right, well-written test cases are an excellent communication tool that evolves together with the software during its whole life-cycle. Large-scale software projects have challenges of their own. Bugs due to unexpected feature interactions are quite common. Ultimately, such bugs are a failure of communication. The complexity in such bugs is often significant. Not at least since the domain knowledge needed to track down the bug is often spread across different teams and individuals. Again, recording that domain knowledge in test cases makes the knowledge accessible.

Levels of test

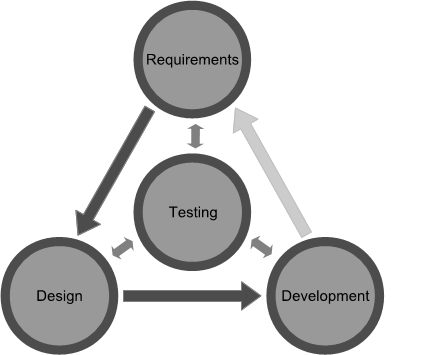

It's beneficial to consider testing at all levels in a software project. Different levels allow us to capture different aspects and levels of details. The overall goal is to catch errors as early as possible, preferably on the lowest possible level in the testing chain. But our division is more than a technical solution. It's a communication tool. The tests build on each other towards the user level. The higher up we get, the more we can involve non-technical roles in the conversation. Each level serves a distinct purpose:

- Unit tests are driven by implicit design requirements. Unit tests are never directly mapped to formal requirements. This is the technically most challenging level. It's impossible to separate unit tests from design. Instead of fighting it, embrace it; unit tests are an excellent medium and opportunity for design. Unit tests are written solely by the developer responsible for a certain feature.

- Integrations tests are where requirements and design meet. The purpose of integration tests is to find errors in interfaces and in the interaction between different units as early as possible. They are driven by use cases, but also by design knowledge.

- System tests are the easiest ones to formulate. At least from a technical perspective. System tests are driven by requirements and user stories. We have found that most well-written suites are rather fine-grained. One requirement is typically tested by at least one test case. That is an important point to make; when something breaks, we want to know immediately what it was.

The big win: tap into testers' creativity by automating

Testing in the figure above refers to any kind of testing. Among them are exploratory and manual tests. These are the ones that could make a huge qualitative difference; it's under averse conditions that the true quality of any product is exposed.

Thus the purpose of this level of testing is to try to break the software, exploiting its weaknesses, typically by trying to find unexpected scenarios. It requires a different mindset found in good testers; just like design, testing is a creative process. And if we manage to get a solid foundation by automating steps 1-3 above, we get the possibility to spend more time in this phase. As we see it, that's one of the big selling-points of automated tests.

The challenges of test automation

The relative success of a test automation project goes well behind any technical solutions; test automation raises questions about the roles in a project. It's all too easy to make a mental difference regarding the quality of the production code and the test code. It's a classic mistake. The test code will follow the product during its whole life cycle and the same aspects of quality and maintainability should apply here. That's why it's important to have developers responsible for developing these tools and frameworks. Perhaps even most test cases in close collaboration with the testers.

There's one caveat here though; far too many organizations aren't shaped to deal with cross-disciplinary tasks. We often find that although the developers have the skills to write competent test frameworks and tools, they're often not officially responsible for testing. In social psychology there's a well-known phenomenon known as diffusion of responsibility [DOR]. Simply put, a single individual is less likely to take responsibility for an action (or inaction) when others are present. The problem increases with group size and has been demonstrated in a range of spectacular experiments and fateful real-world events.

The social game of large-scale software development is no exception. When an organization fails to adequately provide and assign responsibilities, we're often left with an unmaintainable mess of test scripts, simulators and utilities since the persons developing them aren't responsible for them; they're not even the users. These factors combined prevent the original developers from gaining valuable feedback. At the end, the product suffers along with the organization.

Changing a large organization is probably one of the hardest tasks in our modern corporate world. We're better advised to accept and mitigate the problem within the given constraints. One simple approach is to put focus on mature test environments and/or frameworks. Either a custom self maintained framework or one of the shelf. A QA Manager should consider investing into a testing framework, to discipline and speed up testing. Especially if there already are existing test cases that shall be re-run over and over again. Such a task is usually quite boring and therefore error prone. Automating it minimizes the risks of errors due to human boredom. That's a double win.

System level testing

System level testing refers to requirements[REQ], user stories and use cases. Reading and analysing requirements, user stories and use cases is a vital part in the preparation of test cases. Use cases are very close to test cases. However their focus is more on describing how the user interacts with the system rather than specifying the input and expected results. That said, when there are good use cases and good test cases, they tend to be very close to each other.

Preparing test cases - the requirements link

With increasing automation the line between development and testing gets blurred; writing automated test cases is a development activity. But when developers maintain the frameworks, what's the role of the tester?

Well, let's climb the software hill and discuss requirements first. Requirements shall be treated and understood as generic versions of use cases. It is hard to write good requirements, but it is important to have them to keep an eye on all general aspects of the product. Now, on the highest level test cases are derived directly and in-directly from the requirements. That makes the test cases place holders for knowledge. It's the communicating role of the test cases. Well-written test cases can be used as the basis for communication around requirements and features. Increasingly, it becomes the role of the tester to communicate with Business Analysts[AKB] and Product Managers[AKP].

Once a certain requirement or user story has been clarified, that feedback goes into the test cases.

The formulation of test cases is done in close collaboration with the test specialists on the team. The tester is responsible for deciding what to test; the developer is responsible for how. In that context, there are two common problems with requirements; they get problematic when they're either too strict or too fuzzy. The following sections will explain the details and cures.

Avoiding too strict requirements

Some requirements are simply too strict, too detailed. Let's consider the following simple example. We want to write a calculator, so we write a requirement that our product shall fulfil the following: 2 + 2 = 4, 3 * 5 = 15, 9 / 3 = 3. How many such requirements shall we write? On this level of detail there will be lots of them (let's say infinite...). Writing test cases will immediately show that the requirements are too detailed. There is no generic statement capturing the abstraction behind, specifying what really shall be done. There are 3 examples on input and output. In a pathological case of testing we will write exactly 3 test cases (copy paste from requirements) and reduce the calculator to the look-up table that contains exactly those 3 rows with values and operations as above. It' may be a trivial example but it expands to all computing.

Further, for scalability reasons it's important to limit the number of test cases with respect to their estimated static path count. One such technique is to introduce Equivalence Classes for input data by Equivalence Class Partitioning [ECP]. That will help to limit number of tests for interface testing. ECP will also guide in the organization of the test cases by and dividing them in normal operation test cases, corner cases and error situations.

Test data based on the ECP technique makes up an excellent base for data-driven tests. Data-driven tests are another example on separating the mechanism (i.e. the common flow of operations) from the stimulus it operates on (i.e. the input data). Such a diversion scales well and expresses the general case clearer as well.

Cures for fuzzy requirements

Clearly too strict requirements pose a problem. On the other side of the spectrum we got fuzzy requirements. Consider a requirement like: "during start everything shall be logged". On the development team we might very well understand the gist in some concrete way, but our customer may have a completely different interpretation. Simply asking the customer: "How will it be tested?" may go a long way towards converting that requirement to something like: "During application start-up any information might be possible to log in the logger. Where any information means: start of the main function of the application and all its plug-ins.".

How did our simple question to the customer helped us sort out the fuzziness in the requirement? First of all "every" was transformed to "any". To get the conversation going we could ask the user if he/she is interested in every information like the spin of the electrons in CPU. Writing test cases or discussing them with the user often give us his perspective. Often, the user considers different information useful for different purposes. Consider our definition of "any" information above. Here "any" for release 1.0 could imply logging the start of the main function and plug-ins. We see here that such a requirement does not limit the possible extensions for release 2.0.

The discussion also helped us in clarifying what "logged" really meant. From a testing point of view we now see that test shall consider the presence of the logger. And later requirements may precisely define the term logger and how the logs looks like. Again requirements about the shape of the logs shall be verified by proper test cases and by keeping the customer in the loop. Preparing the test case may guide the whole development team towards a very precise definition of logs.

Consider another real-world example from a product that one of the authors was involved in. The requirements for that product specified that transactions must be used for all operations in the database. That's clearly not something the user cares about (unless we are developing an API for a database...). It's a design issue. The real requirements would be something related to persistent information in the context of multiple, concurrent users and leave the technical issues to the design without specifying a solution.

One symptom of this problem is requirements that are hard to test. The example above ended up being verified by code inspection - hard to automate, and hard to change the implementation. Say we found something more useful than a relational database. As long as we provide the persistency needed, it would be perfectly fine for the end-user. But, such a design change would trigger a change in the requirements too.

Finally some words on Agile methodologies since they're common place these days. Agile approaches may help in test preparation as well as in defining the strategy, tools and writing test cases. The reason Agile methodologies may facilitate these aspects is indirect through the potentially improved communication within the project. But, the technical aspects remain to be solved independent of the actual methodology. Thus, all aspects of the software product has to be considered anyway from a test perspective; shipping, installation process, quality of documentation (which shall be specified in requirements as well) and so on.

Traceability

In safety-critical applications traceability is often a mandatory requirement in the regulatory process. We would like to stress that traceability is an important tool on any large-scale project. Done right, traceability is useful as a way to control the complexity, scale and progress of the development. By linking requirements to test cases we get an overview of the requirements coverage. Typically, each requirement is verified by one or more test cases. A requirement without test(s) is a warning flag; such a requirement is often useless, broken or simply too fuzzy.

From a practical perspective it's useful to have bi-directional links. Just like we should be able to trace a requirement to its test cases, the test cases should explain which requirement (or parts of it) it tests. Bi-directional traceability is of vital importance when preparing or generating test reports.

Such a link could be as simple as a comment or magical tag in each test case, it could be an entry in the test log, or the links could be maintained by one of the myriad of available tools for requirements tracing.

Design of test environments

Once we understand enough of the product to start sketching out designs we need to consider the test environments. As discussed earlier, we recommend testing on different complementary levels. With respect to the test environment, there may well be a certain overlap and synergies that allow parts to be shared and re-used across the different test levels. But once we start moving up from the solution domain of design (unit tests) towards the problem domain (system and acceptance tests), the interfaces change radically. For example, we may go from testing a programmatic API of one module with unit tests to a fully-fledged GUI for the end-user. Clearly, these different levels have radically different needs with respect to input stimulation, deployment and verification.

Test automation on GUI level

In large-scale projects automatic GUI tests are a necessity. The important thing is that the GUI automation is restricted to check the behaviour of the GUI itself. It's a common trap to try to test the underlying layers through the GUI (for example data access, business logic). Not only does it complicate the GUI tests and make the GUI design fragile to changes; it also makes it hard to inject errors in the software and simulate averse conditions.

However, there are valid cases for breaking this principle. One common case is when attempting to add automated tests to a legacy code base. No matter how well-designed the software is, there will be glitches with respect to test automation (e.g. lack of state inspection capabilities, tightly coupled layers, hidden interfaces, no possibility to stimulate the system, impossible to predictably inject errors). In this case, we've found it useful to record the existing behaviour as a suite of automated test cases. It may not capture every aspect of the software perfectly, but it's a valuable safety-net during re-design of the software.

The test cases used to get legacy code under test are usually not as well-factored as tests that evolve with the system during its development. The implication is that they tend to be more fragile and more inclined to change. The important point is to consider the tests as temporary in their nature; as the program under test becomes more testable, these initial tests should be removed or evolve into formal regression tests where each test cases captures one, specific responsibility of the system under test.

Integration defines error handling strategies

In large-scale software development one of the challenges is to ensure feature and interface compatibility between sub-systems and packages developed by different teams. It's of vital importance to get that feedback as early as possible, preferably on each committed code change. In this scope we need to design all tests to be sure that all possible connections are verified. The tests shall predict failures and test how one module will behave in case another other module fails. The reason is twofold.

First, it's in averse conditions that the real quality of any software is brutally exposed; we would be rich if given a penny for each Java stack trace we've seen in live systems on trains, airports, etc. Second, by focusing on inter-module failures we drive the development of an error handling strategy. And defining a common error handling policy is something that has to be done early on a multi-team software project. Error handling is classic example on cross-cutting functionality that cannot be considered locally.

Simulating the environment

Quite often we need to develop simulators and mock-ups as part of the test environment. Having or being able to have mock-ups will detect any lack of interfaces, especially when mock objects or modules has to be used instead of real ones. Further, simulators allow us to inject errors in the system that may be hard to provoke when using the real software modules.

Finally, a warning about mock objects based on hard-earned experience. With the increase in dynamic features in popular programming languages (reflection, etc) many teams tend to use a lot of mocks at the lower levels of test (unit and integration tests). That may be all well. Mocks may serve a purpose. The major problem we see is that mocks encourage interaction testing which tends to couple the test cases to a specific implementation. It's possible to avoid but any mock user should be aware of the potential problems.

Programming Languages for Testing

The different levels of tests introduced initially are pretty rough. Most projects will introduce more fine-grained levels. If we consider such more detailed layers of testing (e.g. acceptance testing, functional testing, production testing, unit testing) then except for unit testing, the most important part here is to separate the language used for testing from the development language. There are several reasons for this.

The development language is typically selected due to a range of constraints. These may be due to regulatory requirements in safety or medical domains, historical reasons, efficiency, or simply due to the availability of a certain technology on the target platform. In contrast, a testing language shall be as simple as possible. Further, by using different languages we enable cross-verification of the intent which may help in clarifying the details of the software under test. Developers responsible for supporting testing shall prepare high level routines that can be used by testers without harm for the tested software. It can be either commercial tools [LMT] or open sources [OMT].

When capturing test case we recommend using a formal language. In system or mission critical SW development there are formal processes built around standards like DO-178B and similar. In regular SW development using an automated testing framework forces developers to write test specifications in a dedicated high-level language. Most testing tools offer such support. This is important since formal language helps in the same way as normal source code. It can be verified, executed and is usually expressive in the test domain. If it is stored in plain text then comparison tools may help to check modifications and history. More advanced features are covered by Test Management tools.

TDD, unit tests and the missing link

A frequent discussion about unit tests concern their relationship to the requirements. Particularly in Test-Driven Development (TDD)[TDD] where the unit tests are used to drive the design of the software. With respect to TDD, The single most frequent question is: "how do I know the tests to write?" It's an interesting question. The concept of TDD seems to trigger something in peoples mind; something that the design process perhaps isn't deterministic. It particularly interesting since we rarely hear the question "how do I know what to program?" although it is exactly the same problem. As we answer something along the lines that design (as well as coding) always involves a certain amount of exploration and that TDD is just another tool for this exploration we get, probably with all rights, sceptical looks. The immediate follow-up question is: "but what about the requirements?" Yes, what about them? It's clear that they guide the development but should the unit tests be traced to requirements?

Requirements describe the "what" of software in the problem domain. And as we during the design move deeper and deeper into the solution domain, something dramatic happens. Our requirements explode. Robert L. Glass identifies requirements explosion as a fundamental fact of software development: "there is an explosion of "derived requirements" [..] caused by the complexity of the solution process" [GLA]. How dramatic is this explosion? Glass continues: "The list of these design requirements is often 50 times longer than the list of original requirements" [GLA]. It is requirements explosion that makes it unsuitable to map unit tests to requirements; in fact, many of the unit tests arise due to the "derived requirements" that do not even exist in the problem space!

Avoid test dependencies on implementation details

Most mainstream languages have some concept of private data. These could be methods and members in message-passing OO languages. Even the languages that lack direct language support for private data (e.g. Python, JavaScript) tend to have established idioms and conventions to communicate the intent. In the presence of short-term goals and deadlines, it may very well be tempting to write tests against such private implementation details. Obviously, there's a deeper issue with it; most testers and developers understand that it's the wrong approach.

Before discussing the fallacies associated with exposed implementation details, let's consider the purpose of data hiding and abstraction. Why do we encapsulate our data and who are we protecting it from? Well, it turns out that most of the time we're protecting our implementations from ourselves. When we leak details in a design we make it harder to change. At some point we've probably all seen code bases where what we expected to be a localized change turned out to involve lots of minor changes rippling through the code base. Encapsulation is an investment into the future. It allows future maintainers to change the how of the software without affecting the what.

With that in mind, we see that the actual mechanisms aren't that important; whether a convention or a language concept, the important thing is to realize and express the appropriate level of abstraction in our everyday minor design decisions.

Tests are no different. Even here, breaking the seal of encapsulation will have a negative impact on the maintainability and future life of the software. Not only will the tests be fragile since a change in implementation details may break the tests. Even the tests themselves will be hard to evolve since they now concern themselves with the actual implementation which should be abstracted away.

That said, it may well exist cases where a piece of software simply isn't testable without relaying on and inspecting private data. Such a case is actually a valuable feedback since it often highlights a design flaw; if something is hard to test we may have a design problem. And that design problem may manifest itself in other usage contexts later. As the typical first user of a module, the test cases are the messenger and we better listen to him. Each case requires a separate analysis, but we've often found one of the following flaws as root cause:

- Important state is not exposed - perhaps we shall think about some state of the module or class that shall be exposed in a kind of invariant way (e.g. by COW, const).

- Class/Module is complicated with overly strong coupling.

- The interface is too poor to write essential test cases.

- A proper bridge (or in C++ pimpl) pattern is not used to really hide private details that shall not be visible. In this case it's simply a failure of the API to communicate by separating the public from the hidden parts.

Coping with feedback

As a tester starts to write test cases expected to be run in an automated way he will usually detect anomalies, asymmetric patterns and deviations in the code. Provided coding and testing are executed reasonably parallel in time, this is valuable feedback to the developer. On a well-functioning team, the following information would typically flow back to the designers of the code:

- Are there any missing interfaces? Or are there perhaps too many interfaces bloating the design?

- Is it intended like that?

- Is the SW conceptually consistent?

- Are the differences between similar methods documented and clearly expressed?

Since the test cases typically are the first user of the software they are likely to run into other issues that have to be addressed earlier rather than becoming a maintenance cost. One prime example is the instantiation of individual software components and systems. The production and test code may have different needs here. In some cases, the test code has to develop mechanisms for its own unique needs, for example factory objects to instantiate the components under test. In that case, the tester will immediately detect flaws and complicated dependency chains.

Automatic test cases has another positive influence on the software design. When we want to automate efficiently, we will have to separate different responsibilities. This split is typically done based on layers where each layer takes us one step further towards the user domain. Examples on such layers include DB access, communication, business logic and GUI. Another typical example involves presenting different usage views, for example providing both a GUI and a CLI.

Filling data into classes or data containers

This topic brings many important design decision under consideration. Factories but in general construction of the SW is always tricky in terms of striking a balance between flexibility and safety. Let's consider a simple example class, Authentication. Let's assume the class contains two fields: login and password. If we will start to write test cases to check access using that class we could arrive at a table with the following test data: Authentication = {{A,B},{C,D},{E,F},{G,H},{I,J}}. If the class has two getters (login, password) and two setters (similar ones), it is very likely that we do not need to separate login and password. Changing login usually forces us to change password too. What about having two getters and one setter that takes two arguments and one constructor with two arguments? Seems to be good simplification. It means that by preparing the tests, we arrived at suggested improvements in the design of the class.

Gain feedback from code metrics

When testing against formal requirements the initial scope is rather fixed. By tracing the requirements to test cases we know the scope and extent of testing necessary. A more subjective weighting is needed on lower levels of test. Since unit tests (as discussed earlier) are written against implicit design requirements there's no clear test scope. How many tests shall we write?

Like so many other quality related tools, there's a point of diminishing return with unit tests. Even if we cover every corner of the code base with tests there's absolutely no guarantee that we get it right. There are just too many factors, too many possible ways different modules can interact with each other and too many ways the tests themselves may be broken. Instead, we recommend basing the decision on code metrics.

Calculating code metrics, in particular cyclomatic complexity and estimated static path count [KAC], may help us answer the question for a particular case. Code Complexity shows the minimal number of test actions or test cases that shall be considered. Estimate Static Path Count on the other hand shows a kind of maximal number (true maximal number is quite often infinity). It means that tools which calculates code metrics point to areas that need improvement as well as how to test the code. Basically, code metrics highlight parts of the code base that might be particularly tricky and may require extra attention. Note that these aspects are a good subject for automation. Automatic tests can be checked against coverage metrics and the code can be automatically checked with respect to cyclomatic complexity. Just don't forget to run the metrics on the test code itself; after all, it's going to evolve and live with the system too.

Summary

Test automation is a challenge. Automating software testing requires a project to focus on all areas of the development, from the high-level requirements down to the design of individual modules. Yet, technical solutions aren't enough; successful test automation requires a working communication and structured collaboration between a range of different roles on the project. This article has touched all those areas. While there's much more to write on the subject, we hope our brief coverage may serve as a starting-point and guide on your test automation tasks.

Bibliography

[AKB] Allan Kelly, On Management: The Business Analyst's Role

[AKP] Allan Kelly, On Management: Product Managers

[APT] Adam Tornhill, The Roots of TDD

[DOR] Diffusion of Responsibility

[ECP] Equivalence Partitioning

[GLA] Robert L. Glass, Facts and Fallacies of Software Engineering

[LMT] List of notable test management tools

[OMT] Open Source Testing

[REQ] Requirements

[RQA] Requirements Analysis

[TDD] Test-Driven Development